Estimates: A Necessary Evil

Despite being an age-old problem in the IT industry (and presumably in other industries too), it still concerns me how much we rely on estimates to manage resources on complex multi-million pound projects. We call them estimates to hide the truth that they are at best educated guesses and at worst complete fantasy. In the same way that fortune tellers use clues to determine a punter’s history and status (e.g. their clothes, watch, absence of a wedding ring etc.), we as estimators naturally seek out clues as to the nature of a potential project. Have we dealt with this business function before? Is their strategy clear? Will we get clear requirements in time? We then use these clues to plan out the project in our heads and load our estimates accordingly, but it’s hard to avoid Hofstadter’s Law, which states that:

“It always takes longer than you expect, even when you take into account Hofstadter’s Law.”

— Douglas Hofstadter, Gödel, Escher, Bach: An Eternal Golden Braid[1]

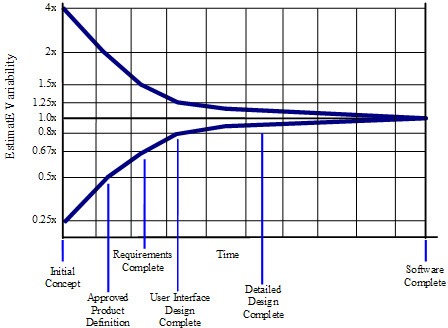

We are asked daily to gaze into our crystal balls and come up with an accurate prediction of how long something will take, based on very few requirements or even context. How far out are these estimates likely to be in this situation? Well, the boffins at NASA can help here with their Cone of Uncertainty.

The cone of uncertainty is excellent at visually displaying the evolution of uncertainty on a project. Based on research by NASA, it shows that at the start of a project the estimates could be out by as much as 4x. Whilst this reduces as the work progresses and more becomes known about the project, it is often at the very early stage that estimates are collected and used as the basis for a business case or to acquire resources. This is despite the fact that they are known to be significantly inaccurate at this point.
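To make the 4x figure concrete, here is a minimal sketch of how a single point estimate widens into a range depending on the project stage. Only the 4x upper bound at the start comes from the text above. The matching 0.25x lower bound, the later-stage multipliers, the stage names and the `estimate_range` helper are all assumptions chosen for illustration.

```python
# Illustrative multipliers only. The 4x upper bound at project start is the
# figure quoted in the post; every other number here is an assumed value.
CONE = {
    "initial_concept": (0.25, 4.0),
    "requirements_complete": (0.67, 1.5),
    "detailed_design_complete": (0.9, 1.1),
}


def estimate_range(point_estimate_days, stage):
    """Return the (low, high) range in days implied by the cone at a stage."""
    low_factor, high_factor = CONE[stage]
    return point_estimate_days * low_factor, point_estimate_days * high_factor


low, high = estimate_range(100, "initial_concept")
print(f"A 100 day estimate at concept stage: {low:.0f} to {high:.0f} days")
```

Under these assumptions, a "100 day" estimate given at the concept stage really means anything from 25 to 400 days, which is worth stating out loud when that number is about to go into a business case.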

Estimating is hard and by its nature inaccurate, but that is not surprising considering the aspects of human nature we have to deal with. These are excellently outlined in this post, and they include our strong desire to please and “The Student Syndrome” (whereby we tend to put off until later what we could do now). The post compares overestimation and underestimation, highlighting that the effects of underestimating are far worse than those of overestimating, and concludes…

“Never intentionally underestimate. The penalty for underestimation is more severe than the penalty for overestimation. Address concerns about overestimation through control, tracking and mentoring but not by bias.”

So underestimating is bad. It’s a shame, then, that we have the concept of the “Planning Fallacy”, based on research by Daniel Kahneman and Amos Tversky, which highlights a natural…

“tendency for people and organizations to underestimate how long they will need to complete a task, even when they have experience of similar tasks over-running.”

There are many explanations for the results of this research, but interestingly it showed that the fallacy…

“only affects predictions about one’s own tasks; when uninvolved observers predict task completion times, they show a pessimistic bias, overestimating the time taken.”

…which has implications for the estimating process, and conflicts with the sensible view held by many (including Joel on Software) that the estimate must be made by the person doing the work. It makes sense to ask the person doing the work how long it will take, and it certainly enables them to raise issues such as a lack of experience with a technology, but this research highlights that they may well still underestimate it.

In many corporate cultures it is no doubt much safer to overestimate work than to underestimate it. Over time, however, this can result in organisations where large development estimates become the norm and nothing but mandatory project work is authorised. This not only stifles innovation but also makes alternative options more attractive to the business, such as fulfilling IT resource requirements externally via third parties (e.g. outsourcing/offshoring).

The pace of technological change also fights against our estimating skills. The industry itself is still very young and is changing rapidly around us. This shifting landscape makes it very difficult to find best practice and make it repeatable. As technologies change, so does a developer’s uncertainty when estimating. For example, a developer who has been coding C++ for 5 years was probably starting to make good estimates for the systems on which he worked, but if he moves to .NET his estimating accuracy is set back a few years, not because of the technology but because of his lack of familiarity with it. It’s the same for Architects, System Admins and Network professionals too. As an industry we are continuously seeking out the next holy grail, the next magic bullet, and yet we are not taking the time to train our new starters or to develop valid standards/certifications in order to grow as an industry. This is a challenge that many professions in history have had to face up to and overcome (e.g. early medicine, structural architecture, surveying, accountancy etc.), but that’s a post for another day.

OK, OK, so estimates are evil. Can we just do without them? Well, one organisation apparently seems to manage. According to some reports…

“Google isn’t foolish enough or presumptuous enough to claim to know how long stuff should take.”

…and therefore avoids date-driven development, with projects instead working at optimum productivity without a target date in mind. That’s not to say that everything doesn’t need to be done as fast as possible; it just means they don’t estimate what “fast as possible” means at project start. By encouraging a highly productive and creative culture and avoiding publicly announcing launch dates, Google is able to build amazing things quickly, in the time it takes to build them, unbound by an arbitrary project deadline based on someone’s ‘estimate’. It seems to work for them. Whether or not this is true in reality, it makes for an interesting thought. The necessity for estimates comes from the way projects are run and how organisations are structured, and they do little to aid engineers in the process that is software development.

So why do we cling to estimates? Well, unless your organisation is prepared to radically change its culture, they are without doubt a necessary evil: imperfect, but a mandatory element of IT projects. The key, therefore, is to improve the accuracy of our estimating process one estimate at a time, whilst still reminding our colleagues that they are only estimates and by their nature they are wrong.

Agile estimating methods include techniques like Planning Poker, which simplify the estimating process down to degrees of complexity. Whilst they can be very successful, they still rely on producing an estimate of sorts, even if it is only a classification by magnitude of effort. Just this week I was chatting to a PM on a large agile project who was frustrated by the talented development team’s inability to hit their deadlines, purely as a result of their poor estimating.

There are many suggested ways to improve the process and help with estimate accuracy and I’m not going to cover them all here, but regardless of the techniques that you use don’t waste the valuable resource that is your ‘previous’ estimates. Historic estimates when combined with real metrics of how long that work actually took are invaluable to the process of improving your future estimates.
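As a rough illustration of putting historic estimates to work, the sketch below compares past estimates with what the work actually took and uses the typical overrun to adjust a new estimate. The task names, the figures and the `calibrated` helper are all hypothetical, and scaling by the median ratio is just one simple choice among many possible approaches.

```python
from statistics import median

# Hypothetical history: (task, estimated_days, actual_days).
history = [
    ("login page", 5, 8),
    ("report export", 10, 12),
    ("payment integration", 20, 35),
    ("admin screens", 8, 8),
]


def overrun_ratios(records):
    """Actual/estimated ratio per task; a value above 1 means it overran."""
    return [actual / estimated for _, estimated, actual in records]


def calibrated(raw_estimate_days, records):
    """Scale a new estimate by the median historic actual/estimated ratio."""
    return raw_estimate_days * median(overrun_ratios(records))


print(f"Raw estimate of 10 days, calibrated: {calibrated(10, history):.1f} days")
```

The median is used rather than the mean so that one spectacular overrun does not dominate the adjustment. With the made-up history above, a raw 10 day estimate becomes roughly 14 days.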

Consistency is important to enable a project to be compared with previously completed ones. By using a template or check-sheet you ensure that all factors are considered and recorded, and avoid the mistakes that come from forgetting to include items. Having an itemised estimate in a consistent format enables estimates to be compared easily, providing a quick sanity test (e.g. “Why is project X so much more than project Y?”). It also allows you to capture and evolve the process over time, adding new items to your template or checklist as you find them relevant. Metrics, such as those provided by good ALM tools (e.g. Team Foundation Server or Rational Team Concert), are useful for many things but especially for feeding back into the estimating process. By knowing how long something actually took to build, you can more accurately predict how long it will take to build something similar.
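A template does not need to be anything elaborate. The sketch below assumes a hypothetical five-item checklist and two made-up project estimates, and shows both the "what did we forget?" check and the quick "why is X more than Y?" comparison described above. The item names and helper functions are illustrative only.

```python
# Hypothetical checklist; a real template would hold whatever items your
# organisation has learned to include over time.
TEMPLATE = ["analysis", "development", "testing", "deployment", "documentation"]


def missing_items(estimate):
    """Checklist items that the estimate has not accounted for."""
    return [item for item in TEMPLATE if item not in estimate]


def compare(estimate_x, estimate_y):
    """Per-item difference in days (X minus Y); missing items count as 0."""
    return {
        item: estimate_x.get(item, 0) - estimate_y.get(item, 0)
        for item in TEMPLATE
    }


# Made-up itemised estimates, in days.
project_x = {"analysis": 10, "development": 40, "testing": 20,
             "deployment": 5, "documentation": 5}
project_y = {"analysis": 5, "development": 35, "testing": 10}

print("Project Y is missing:", missing_items(project_y))
print("X minus Y by item:", compare(project_x, project_y))
```

Here the comparison suggests that project Y looks cheaper partly because deployment and documentation were never estimated at all, which is exactly the kind of omission a check-sheet is meant to catch.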

In summary then, estimates are by their nature wrong and, whilst a necessary evil of modern organisations, are notoriously difficult for us mere humans to get right. Hopefully this post has made you think about estimates a little more and reminded you to treat them with the care that they deserve in your future projects.